Introduction

Recently I was presented with a challenge at one of my customers. We were setting up a new, completely isolated environment with its own Active Directory forest, PKI, ADFS, hypervisors and supporting infrastructure: we had to build pretty much everything from scratch. Since the customer is quite security oriented and an external vendor was to get access to this environment to install an application platform, we also agreed to use the Active Directory Tiering concept that Microsoft promotes to ensure that we appropriately protect domain controllers and CAs. Yes yes, I know, there is a successor to the Tiering concept now in the form of management planes, but since this was an internal-only environment that didn’t apply.

So, aside from the challenge of setting up everything from scratch, this article is focused on another topic: remote authentication. Business requirements dictated the use of MFA for the environment, and having worked a lot with smart cards in the past, I suggested we use YubiKeys since they are a modern, feature-rich alternative to smart cards. With the YubiKey 5 NFC you can use all 20 retired key management slots in addition to the single Authentication slot, so we had ample space for certificates.

Also because of business requirements, the PKI had to use ECC P-384 instead of RSA, so the Root CA and all Issuing CAs used elliptic curve keys for their CA certificates. Of course, this doesn’t restrict the end-entity certificates (you can still use RSA for those), but I figured, why not use ECC all the way?

In addition, for security reasons, the domain controllers and CAs were not accessible from the regular internal network. To get access to the sensitive parts of the environment, you had to first log on to a jump host using RDP in a “DMZ” in between the regular internal network and this environment, where the jump host was joined to the new forest we were setting up. This is important to understand from a topology perspective, as Remote Desktop utilizes Network Level Authentication (NLA).

Network Level Authentication (NLA)

Steve Syfuhs, a developer at Microsoft, has written some good articles on NLA, why you should use it and how Remote Desktop authentication works, so I’ll just give a brief explanation of how it works and what it is for. It was introduced with Windows Vista / Server 2008, and Windows XP SP3 gained client-side support.

Let’s start with the commonplace scenario, where you log on using RDP to a server in the internal network and you have full connectivity to at least one domain controller.

- First, the client opens an RDP connection to the server as usual.

- The server responds that it wants NLA, and sends its Ticket-Granting Ticket (TGT) to the client.

- The client resolves a domain controller in the target server’s domain, sends the TGT in a TGS-REQ to the domain controller, and receives a TGS-REP.

- The client uses the newly obtained service ticket to authenticate to the target server with Kerberos over the RDP channel, and the server authenticates back to the client (AP-REQ/AP-REP exchange).

- The client now sends its credentials to the server (username and password or certificate) to authenticate locally on the server, so that the user can get a TGT.

So what NLA does here is to protect your credentials until it is absolutely sure that the target server is actually the one it claims to be, which it does using the Kerberos protocol. If you turn off NLA, then you send your credentials over the RDP channel directly to the server: if someone intercepted your DNS query for the server’s IP address or somehow managed to modify your local hosts file, you could be sending your plaintext credentials to a completely different server and you’d be none the wiser. Boom, compromised account.

“But hang on”, you might interject, “the server still has a certificate that it presents to the client before you connect!”

Yes. Yes it does. But, to be honest here, most people have conditioned themselves to simply ignore certificate warnings. I’m not saying it’s a good thing, just the honest truth. How many of you would actually take the time to check the validity of the certificate, not to mention put in the time needed to properly configure the RDP service to use a trusted certificate?

So. NLA is there for a reason, and that is to protect your credentials because we are all humans and humans suck at security.
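To make the ordering above concrete, here is a toy Python model of the client side of NLA. It is not CredSSP or the real Kerberos exchange: the class and method names are hypothetical, and the only thing it encodes is the invariant described above, namely that the client refuses to release credentials until the server has authenticated itself.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NlaClientSession:
    """Hypothetical toy model of the client side of NLA (not a real API)."""

    server_authenticated: bool = False
    transcript: List[str] = field(default_factory=list)

    def authenticate_server(self, kdc_reachable: bool) -> None:
        # Steps 2-4: obtain a service ticket from a KDC, then run the
        # AP-REQ/AP-REP exchange so the server proves its identity.
        if not kdc_reachable:
            raise ConnectionError("no KDC reachable: the server cannot be authenticated with Kerberos")
        self.transcript.append("TGS-REQ/TGS-REP")
        self.transcript.append("AP-REQ/AP-REP")
        self.server_authenticated = True

    def send_credentials(self, credential_type: str) -> None:
        # Step 5: credentials are only released after mutual authentication.
        if not self.server_authenticated:
            raise PermissionError("refusing to send credentials to an unauthenticated server")
        self.transcript.append(f"credentials ({credential_type})")


session = NlaClientSession()
session.authenticate_server(kdc_reachable=True)
session.send_credentials("certificate")
print(session.transcript)
# ['TGS-REQ/TGS-REP', 'AP-REQ/AP-REP', 'credentials (certificate)']
```

Turning NLA off corresponds to skipping `authenticate_server` entirely, which is exactly the case the model refuses to allow.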

NLA and isolated environments

In the previous scenario, we covered the base scenario of using NLA and RDP in a corporate environment, where everyone is part of the same or trusted domains, and everything just works nicely. However, this is not always the case. In some scenarios, you need to use RDP to connect to a server in an isolated or remote environment where you don’t necessarily have access to all the target infrastructure components.

Consider the scenario outlined in the introduction.

In this case, we are using a client that can RDP to the target server, but cannot communicate with a domain controller in the target server’s domain. This is not a unique scenario; whenever you set up a lab environment using Azure Virtual Machines and RDP to the servers from the Internet (assuming you don’t use ExpressRoute or VPN), you’re in the same situation.

One of the prerequisites of NLA is that you need line of sight to a domain controller in the target server’s domain, otherwise the client can’t perform the necessary Kerberos exchanges to securely authenticate to the server before the connection is established.

“But”, you might claim, “I’ve set up multiple Azure environments and I never had any issue connecting to those servers!”

Well, as Steve outlines in his article on Remote Desktop, if the client cannot resolve a domain controller in the target server’s domain, it falls back to NTLM as in pretty much all other cases. This happens transparently, which is why you most likely never notice it, and the client sends those NTLM credentials to the target server, which then passes them on to a domain controller.

Adding certificates and MFA to the mix

Alright, so now that we’ve outlined the basic concepts and scenarios, let’s dig a little deeper. I mentioned in the introduction that business requirements dictated MFA, and that we went with YubiKeys and certificate authentication. So what happens when you introduce certificate authentication?

Well, for starters, NTLM authentication is no longer possible. The only method of authenticating towards Active Directory using smart card certificates is via the Kerberos PKINIT extension as defined by [MS-PKCA] and RFC 4556 (in addition to the LDAP StartTLS client certificate authentication outlined in my previous article, but that is out of scope here). Since the target servers in a Remote Desktop environment are based on Windows and authentication is based on Active Directory, we are now restricted to the Kerberos protocol. Unfortunately (or maybe fortunately?) this means that the previously mentioned NTLM fallback if the client cannot locate a domain controller no longer works. As a result, if you try to connect to a server using RDP with certificate authentication, you get this (rather ominous) error message:

Now, of course, even if you can locate a domain controller you still need to configure some stuff on the local client before any certificate authentication works. First off, all the domain controllers in the target server’s domain need a KDC Authentication certificate. How you configure this is out of scope of this article, so suffice it to say that that part is taken care of. The client needs to trust those certificates, so you’d need to import the entire CA chain to your local machine certificate store. In my lab, I have a two-tier CA hierarchy with a Root CA and an Issuing CA, so the commands to import these on the local machine would be something like this:

certutil -addstore Root "C:\temp\Contoso Root CA 1.crt"

certutil -addstore CA "C:\temp\Contoso Issuing CA 1.crt"

Of course, you could also distribute these through Group Policy.

Next up, since we are authenticating with certificates to the domain controllers, the domain controllers will want to authenticate back to the client using the KDC Authentication certificate. The client performs a check to see if the issuer of the KDC certificate is trusted in the special NTAuth store, which it won’t be since the client is not a member of the target server’s domain. So to tell the client that it needs to trust the KDC certificate, we also need to import the Issuing CA to the Enterprise NTAuth store:

certutil -enterprise -addstore NTAuth "C:\temp\Contoso Issuing CA 1.crt"

As far as I can tell, you can’t easily distribute certificates to the NTAuth store through Group Policy, so this would need to be a local configuration on the client. You could distribute a batch file to simplify the install as well. Normally, NTAuth certificates are provisioned from the NTAuthCertificates object in Active Directory, but if you’ve read my previous post, you’d know that any CA certificate added to that object will open you up for full forest compromise, so please don’t do that.

So now that we trust the PKI on the target server side, can we authenticate to the target server yet? Well, no. We still don’t have connectivity to a domain controller, so we need something that allows us to do that.
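The situation so far can be summarised as a small decision function. This is a hedged Python sketch that only encodes what this article describes (Kerberos first, a transparent NTLM fallback for passwords, no NTLM fallback for certificates); the function name and return labels are hypothetical, and the KDC Proxy branch anticipates the service introduced next.

```python
def choose_auth_path(dc_locatable: bool, credential: str, kdc_proxy_configured: bool) -> str:
    """Hypothetical summary of the behaviour described in this article (not a Windows API)."""
    if credential not in ("password", "certificate"):
        raise ValueError(f"unknown credential type: {credential!r}")
    if dc_locatable:
        # DCLocator found a DC: normal Kerberos exchange (PKINIT for certificates).
        return "kerberos"
    if kdc_proxy_configured:
        # Kerberos messages are tunnelled over HTTPS to a KDC Proxy instead.
        return "kerberos-via-kdc-proxy"
    if credential == "password":
        # Transparent NTLM fallback; the server passes it on to a domain controller.
        return "ntlm"
    # Certificates only work through Kerberos PKINIT, so there is nothing to fall back to.
    return "fail"


for cred in ("password", "certificate"):
    print(cred, choose_auth_path(dc_locatable=False, credential=cred, kdc_proxy_configured=False))
# password ntlm
# certificate fail
```

The second line of output is the situation that produces the error message above.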

Introducing KDC Proxy

As it turns out, Microsoft provides a Windows service specifically for the purpose of proxying Kerberos requests from an unsecure network to domain controllers. This is called the KDC Proxy Service (KPS), and it was introduced as a supporting service for Direct Access and Remote Desktop Gateway deployments, but it can be used without any of those. Steve, being a great guy, has written an article on this as well. Also, through a friend and colleague of mine, I found out that Steve wanted me to clarify that KDC Proxy only has official Microsoft support when used specifically with Direct Access or RDP Gateway, so bear that in mind.

Basically, it is an HTTPS service that listens on port 443 and passes Kerberos requests from an extranet on to the intranet. To configure this service, you need a Server Authentication certificate and a server with port 443 exposed to the Internet or internal network, depending on where your clients are located. In my case, since we were using ADFS, I had a Web Application Proxy (WAP) server in the “DMZ”, so I just configured the KPS on that server. Normally, you wouldn’t domain-join a WAP server, but in our case we only exposed it to the internal network, so we figured it would make life easier for the admins to be able to manage it the same way as all other servers. It is a little unclear whether the KPS server requires domain membership, but I’d assume that connectivity to a domain controller (for the purposes of DCLocator and Kerberos) is enough.

First off, you need a Server Authentication certificate. I won’t go over how to acquire one; I’ll leave that to you. Just remember to include the KPS FQDN in the Subject Alternative Name extension. It needs to be in the Local Machine Personal store:

Then, using an elevated PowerShell prompt, you can install and set up the KPS with this script:

Install-WindowsFeature -Name Web-Scripting-Tools, Web-Mgmt-Console

Import-Module -Name WebAdministration

$KpsFqdn = "kps.contoso.com"

$KpsPort = 443

$UrlAclCommand = 'netsh http add urlacl url=https://+:{0}/KdcProxy user="NT AUTHORITY\Network Service"' -f $KpsPort

cmd /c $UrlAclCommand

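# If several certificates match, use the one that expires last
$Cert = Get-ChildItem -Path Cert:\LocalMachine\My | Where-Object -FilterScript { $_.Subject -like "*$KpsFqdn*" } | Sort-Object -Property NotAfter -Descending | Select-Object -First 1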

$Guid = [Guid]::NewGuid().ToString("B")

$SslCertCommand = 'netsh http add sslcert hostnameport={0}:{1} certhash={2} appid={3} certstorename=MY' -f $KpsFqdn, $KpsPort, $Cert.Thumbprint, $Guid

cmd /c $SslCertCommand

New-ItemProperty -Path HKLM:\SYSTEM\CurrentControlSet\Services\KPSSVC\Settings -Name HttpsClientAuth -Type Dword -Value 0x1 -Force

New-ItemProperty -Path HKLM:\SYSTEM\CurrentControlSet\Services\KPSSVC\Settings -Name DisallowUnprotectedPasswordAuth -Type Dword -Value 0x1 -Force

New-ItemProperty -Path HKLM:\SYSTEM\CurrentControlSet\Services\KPSSVC\Settings -Name HttpsUrlGroup -Type MultiString -Value "+:$KpsPort" -Force

Set-Service -Name KPSSVC -StartupType Automatic

Start-Service -Name KPSSVC

New-NetFirewallRule -DisplayName "Allow KDCProxy TCP $KpsPort" -Direction Inbound -Protocol TCP -LocalPort $KpsPort

Just change $KpsFqdn and $KpsPort to whatever applies to your setup. The certificate is found automatically, provided that the KPS FQDN is part of the certificate’s subject. Naturally, the KPS FQDN must also be resolvable from outside the environment. The HttpsClientAuth registry value is set to 0x1 to indicate that we want to use certificate authentication.
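The script’s certificate lookup matches on the Subject. If you would rather select on the Subject Alternative Name (where the KPS FQDN has to be anyway), require the Server Authentication EKU and skip expired certificates, the selection logic could look like this Python sketch. `CertInfo` and `pick_kps_certificate` are hypothetical names, and reading the actual certificate store is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

SERVER_AUTH_EKU = "1.3.6.1.5.5.7.3.1"  # id-kp-serverAuth


@dataclass
class CertInfo:
    """Hypothetical, simplified view of a certificate in the Local Machine Personal store."""
    thumbprint: str
    dns_names: List[str]  # Subject Alternative Name dNSName entries
    ekus: List[str]
    not_after: datetime


def pick_kps_certificate(certs: List[CertInfo], kps_fqdn: str,
                         now: Optional[datetime] = None) -> Optional[CertInfo]:
    """Return the valid Server Authentication cert for kps_fqdn that expires last."""
    now = now or datetime.now(timezone.utc)
    wanted = kps_fqdn.lower()
    candidates = [
        c for c in certs
        if wanted in (name.lower() for name in c.dns_names)
        and SERVER_AUTH_EKU in c.ekus
        and c.not_after > now
    ]
    return max(candidates, key=lambda c: c.not_after, default=None)


now = datetime(2021, 5, 25, tzinfo=timezone.utc)
certs = [
    CertInfo("AA", ["kps.contoso.com"], [SERVER_AUTH_EKU], datetime(2022, 1, 1, tzinfo=timezone.utc)),
    CertInfo("BB", ["kps.contoso.com"], [SERVER_AUTH_EKU], datetime(2023, 1, 1, tzinfo=timezone.utc)),
    CertInfo("CC", ["other.contoso.com"], [SERVER_AUTH_EKU], datetime(2024, 1, 1, tzinfo=timezone.utc)),
]
print(pick_kps_certificate(certs, "KPS.contoso.com", now=now).thumbprint)  # BB
```

Picking the certificate that expires last means a renewed certificate wins automatically, as long as it is already present in the store when the binding is (re)created.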

So, now that the KPS is configured, can we log on to the server now? Well, not yet; we need to configure some client settings as well.

Configuring the KDC Proxy client

Any Windows client will always use DCLocator to find a domain controller for Kerberos exchanges before falling back to other options. KDC Proxy is not a standard fallback method, so the clients need to be aware that a KDC Proxy exists for a target domain if the DCLocator procedure fails. Fortunately this can be configured through Group Policy.

This will tell Kerberos clients that for the Kerberos realm “corp.contoso.com”, use the KDC Proxy server “kps.contoso.com” if a domain controller cannot be located. If you’re using a different port, you can update the value to match:

<https kps.contoso.com:17443:KdcProxy />

You’re not restricted to a single KDC Proxy either; you can add multiple hosts (and ports):

<https kps1.contoso.com:17443:KdcProxy kps2.contoso.com:18443:KdcProxy kps3.contoso.com:1443:KdcProxy />

For our purposes, though, let’s just assume that we are using a single host. Remember that you probably need to reboot the client before the settings are applied. While you’re at it, enable ECC certificate logon as well:

So, are we finally ready to log on now? Not quite: you also need to be able to fetch the CRLs of the CAs. I won’t go over how to do this since it heavily depends on your environment, but you can, for instance, publish a new application in WAP. I did so, and it works perfectly.

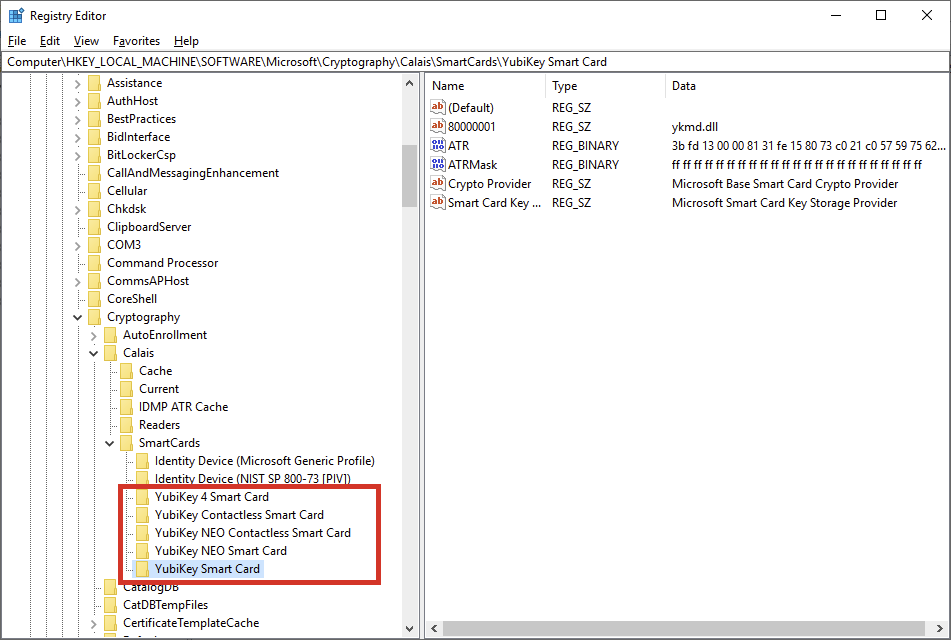

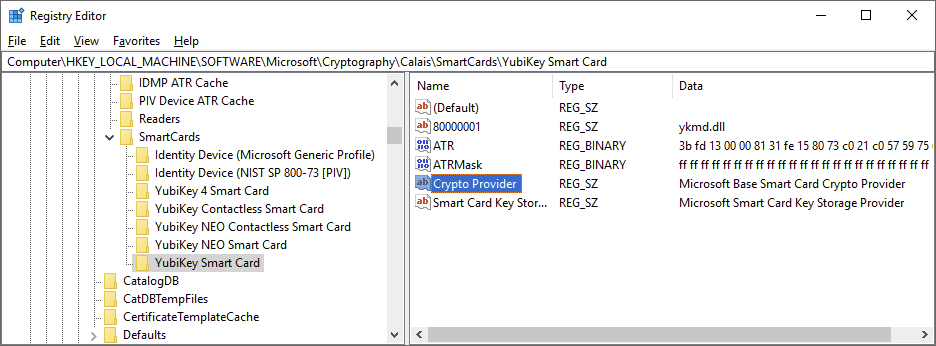
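The KDC proxy server value used in the Group Policy setting earlier can be read as a space-separated list of host:port:path entries. To make that explicit, this Python sketch expands such a value into the HTTPS URLs a client would contact. The function name is hypothetical, and requiring all three parts of each entry is a simplification of mine, not documented parsing behavior of the Windows Kerberos client.

```python
import re
from typing import List

_VALUE_PATTERN = re.compile(r"\s*<https\s+(.+?)\s*/>\s*")


def kdc_proxy_urls(value: str) -> List[str]:
    """Expand a '<https host:port:path ... />' value into HTTPS URLs (sketch)."""
    match = _VALUE_PATTERN.fullmatch(value)
    if not match:
        raise ValueError(f"unexpected KDC proxy value: {value!r}")
    urls = []
    for entry in match.group(1).split():
        host, port, path = entry.split(":")  # this sketch requires all three parts
        urls.append(f"https://{host}:{int(port)}/{path}")
    return urls


print(kdc_proxy_urls("<https kps1.contoso.com:17443:KdcProxy kps2.contoso.com:18443:KdcProxy />"))
# ['https://kps1.contoso.com:17443/KdcProxy', 'https://kps2.contoso.com:18443/KdcProxy']
```

A quick check like this can be handy for verifying that every proxy listed in the policy is actually reachable from the client over HTTPS.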

If, like me, you are using YubiKeys for authentication, you also need to install the Yubico minidriver on both the client and the server. One thing that took me a while to figure out is that simply installing the MSI with no parameters isn’t enough: you will want to install the minidriver with the INSTALL_LEGACY_NODE=1 flag, which adds the following registry keys and entries:

Without these entries, the Windows smart card subsystem will fall back to the generic PIV driver (Identity Device), which won’t work for remote servers. Yubico has an article on this, but for the sake of simplicity I’ll add the command line here as well:

msiexec /i YubiKey-Minidriver-4.1.0.172-x64.msi INSTALL_LEGACY_NODE=1 /quiet

You need to update the file name to whatever version you are using, of course.

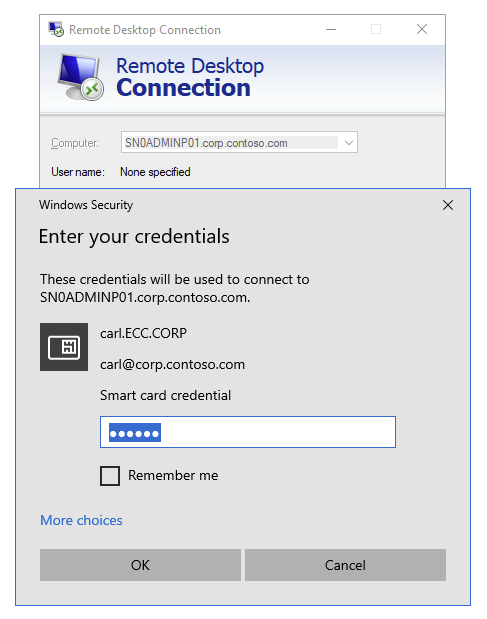

Attempting to log on

So naturally, I’d need a client certificate on my YubiKey to log on. How to enroll certificates to a YubiKey is out of scope of this article, but I’ll try to cover that in a future post.

Anyway, since I mentioned earlier that the entire PKI was based on ECC, I figured I might as well use ECC for the smartcard certificates as well.

So let’s try it, shall we?

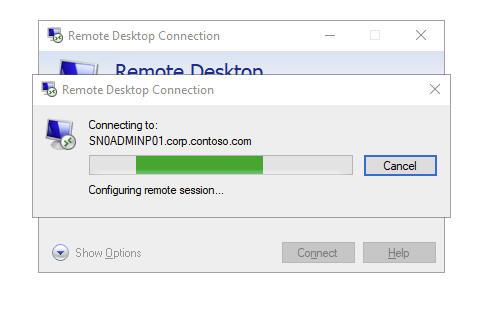

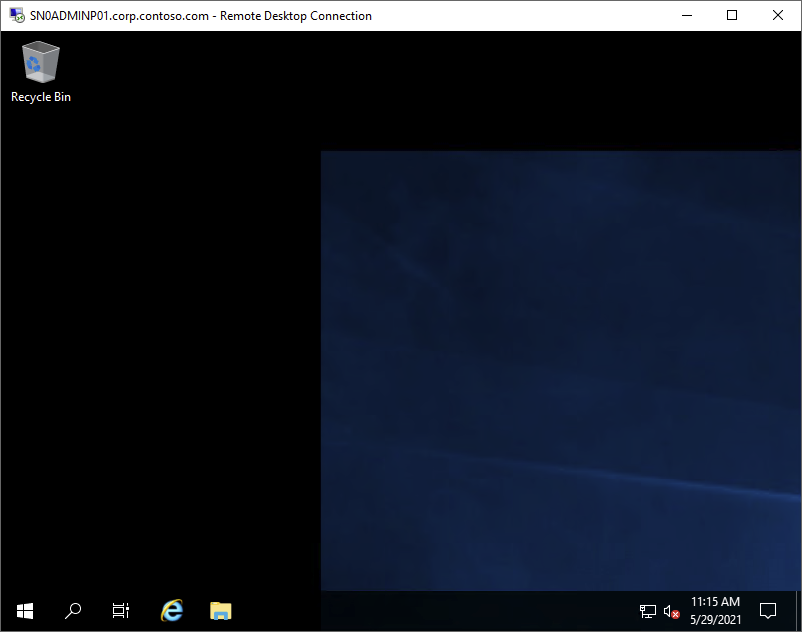

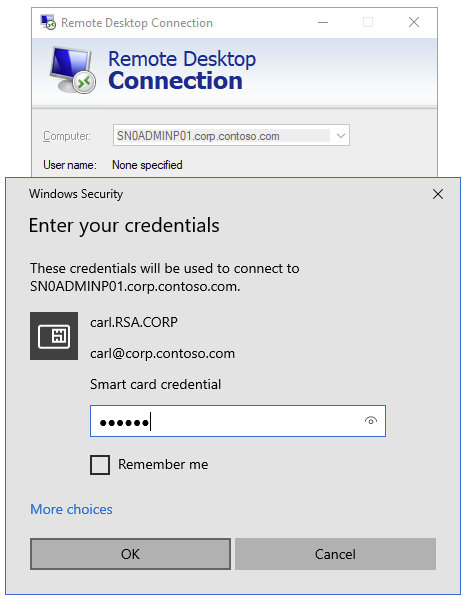

Success! We managed to log on to a remote host without connectivity to a domain controller, instead using the KDC Proxy. Looking at the event log on the WAP server we can see that the request was received and proxied to a KDC:

So, it looks like everything works as expected, problem solved. Right?

Adding ADFS to the equation

When I started testing this I figured ECC is vastly superior to RSA in almost every way: more secure, shorter keys, quicker computations, and so on. So everyone should use it! But, in our design we also wanted to allow access to certain infrastructure components via ADFS, using the same accounts as for logon to the jump hosts. From earlier deployments, I knew that ADFS only supports CSP for certificates, but I figured that it might be only for the actual certificates that ADFS uses, not for certificate authentication? Boy was I wrong. I tried to log on to ADFS using my new ECC certificate and this was the result:

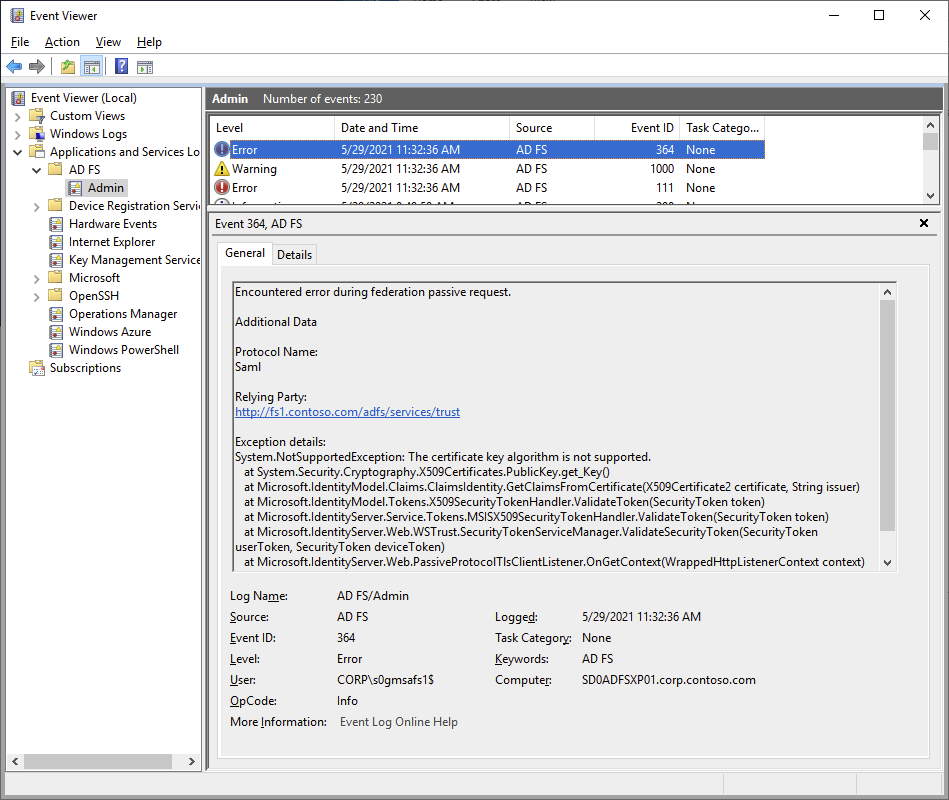

So I logged on to ADFS and checked the event log:

Well, so ECC isn’t supported. More precisely, ADFS doesn’t support keys that are only accessible through a Key Storage Provider (KSP), and ECC keys are one such case.

Alright, back to the drawing board. So we can probably forget about ECC, then. But having a full ECC PKI doesn’t mean that we can’t use RSA on the end entity certificates, so I just went ahead and issued an RSA certificate too and tried to log on to ADFS again:

So this actually works with ADFS now. Let’s try logging on with RDP through the KDC Proxy using the RSA certificate as well:

Wait, what?! It worked just a second ago! Time to put on the troubleshooting gloves.

Finding the root cause

Let’s start by checking the KDC Proxy logs on the WAP server:

No new entries since my last successful logon. Are we even using the KDC Proxy? Let’s start up Wireshark on the WAP server where the KPS runs:

So we can see that there is incoming KPS traffic and that a handshake apparently takes place and succeeds, but the connection is then quickly reset after a few handshake messages. Something fishy is going on… and of course, there were a thousand different things involved here that could be the culprit: the smart card subsystem, the Yubico minidriver, Kerberos settings, the KDC Proxy, the SSL binding settings, SCHANNEL, something in the RDP client or in the RDP listener, CAPI/CAPI2, the smart card KSP/CSP and so on.

By a fluke, I found that there was a special Kerberos event log called Security-Kerberos on the client:

Hmmm, so the TLS connection is established, but it couldn’t send the proxy request. None of the error codes gave me any useful information, though, so I had to keep looking. I figured I could look into the SCHANNEL logs to see if something suspicious happened there, but I had to enable those logs first, which can be done by setting this registry value on the client to 0x7:

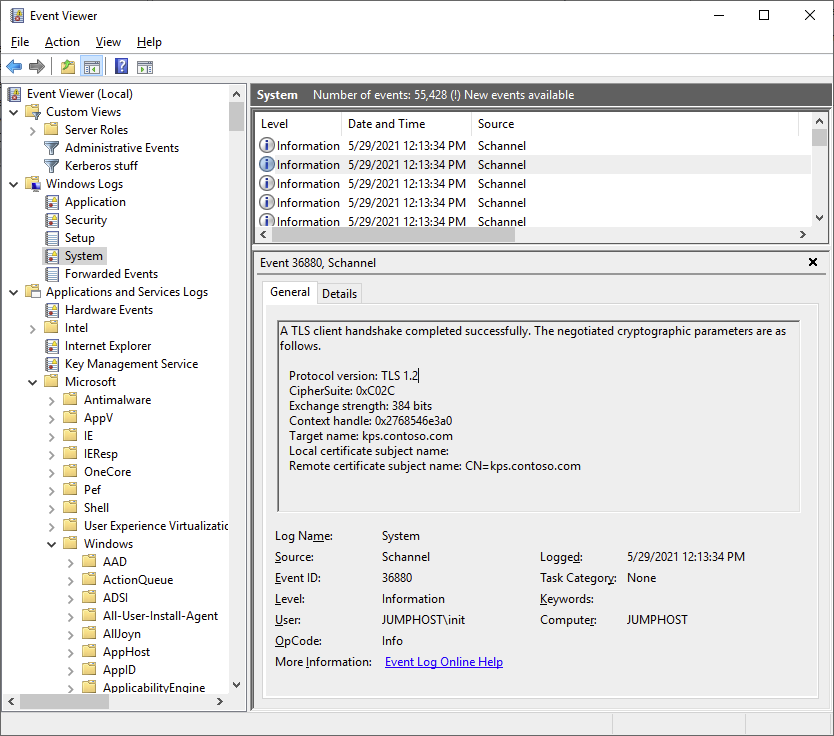

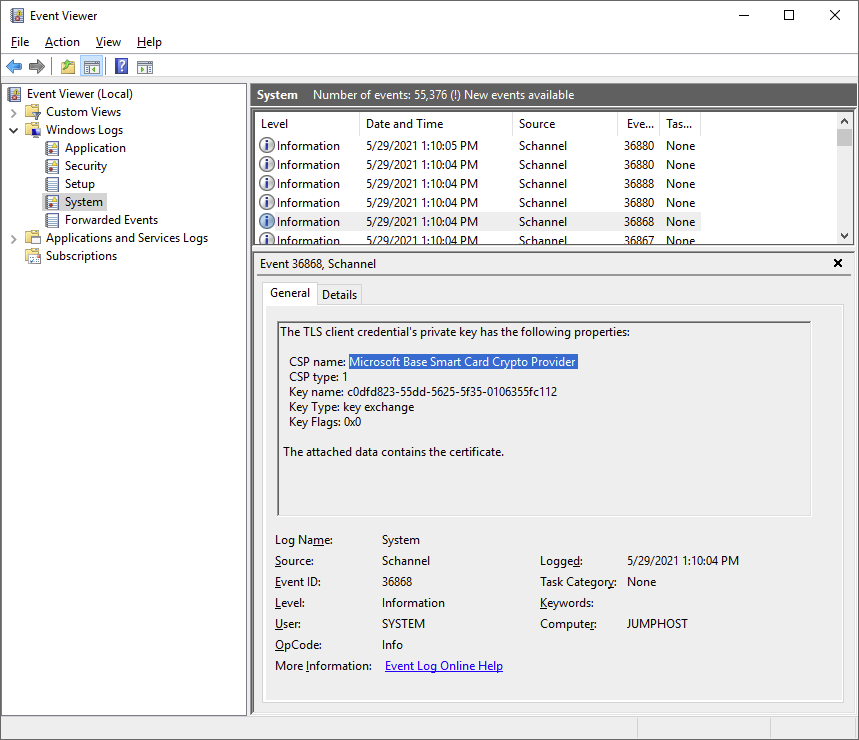

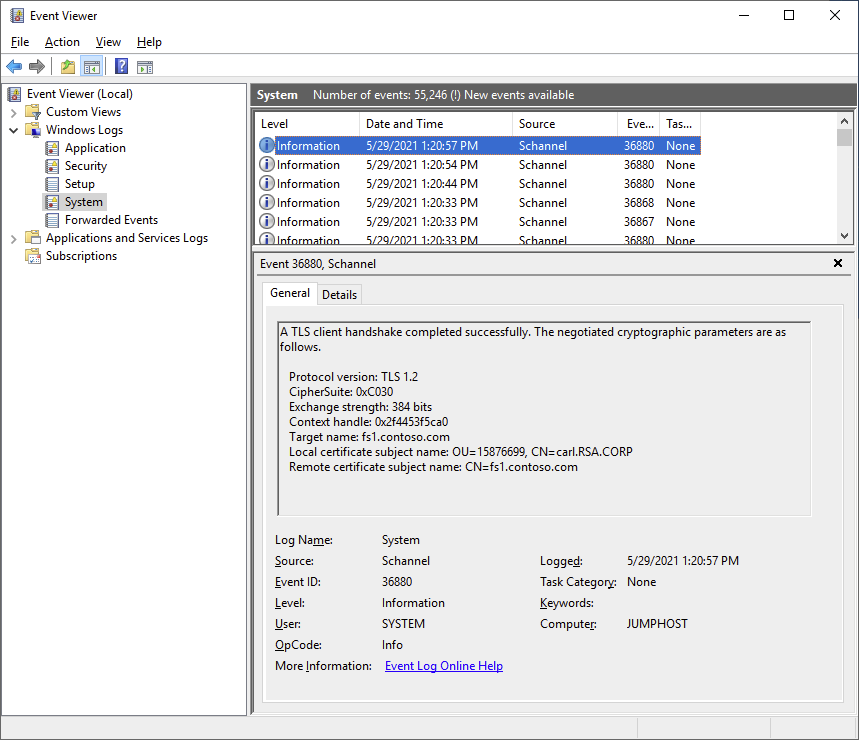
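The value 0x7 is a bitmask. Assuming the commonly documented meaning of the EventLogging DWORD under HKLM\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL (0x1 for errors, 0x2 for warnings, 0x4 for informational and success events), this small Python sketch shows why 0x7 turns on every category. The table and function name are illustrative only.

```python
from typing import List

# Bit meanings as commonly documented for the SCHANNEL EventLogging value.
SCHANNEL_EVENT_LOGGING_BITS = {
    0x1: "error messages",
    0x2: "warning messages",
    0x4: "informational and success events",
}


def describe_event_logging(value: int) -> List[str]:
    """List which SCHANNEL event categories a given EventLogging value enables."""
    return [label for bit, label in SCHANNEL_EVENT_LOGGING_BITS.items() if value & bit]


print(describe_event_logging(0x7))
# ['error messages', 'warning messages', 'informational and success events']
print(describe_event_logging(0x1))
# ['error messages']
```

Since 0x7 also enables the chatty informational events, it is worth setting the value back once the troubleshooting is done.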

After that, SCHANNEL will log lots of events to the System log. I found some interesting stuff:

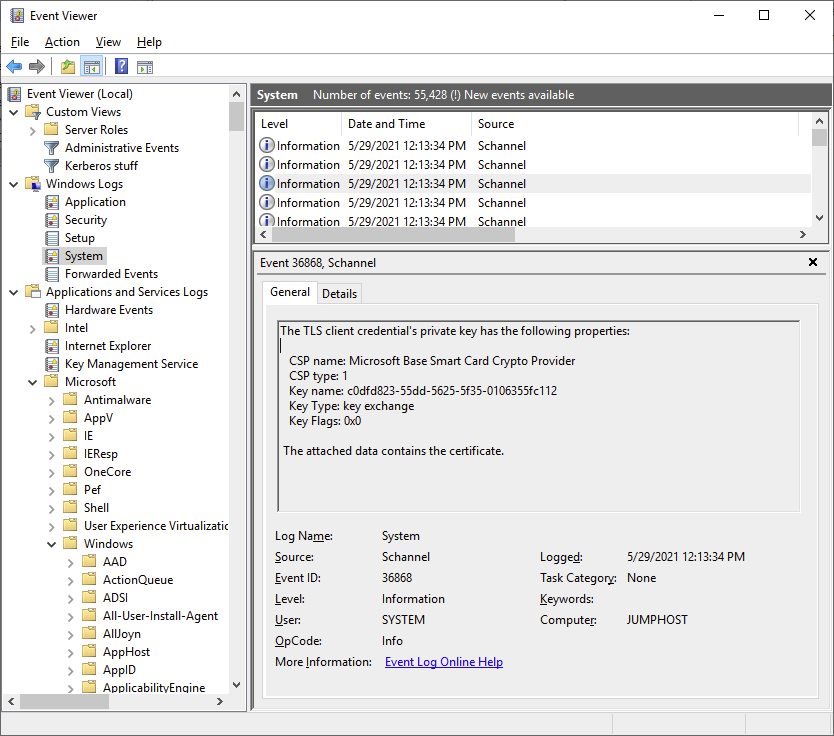

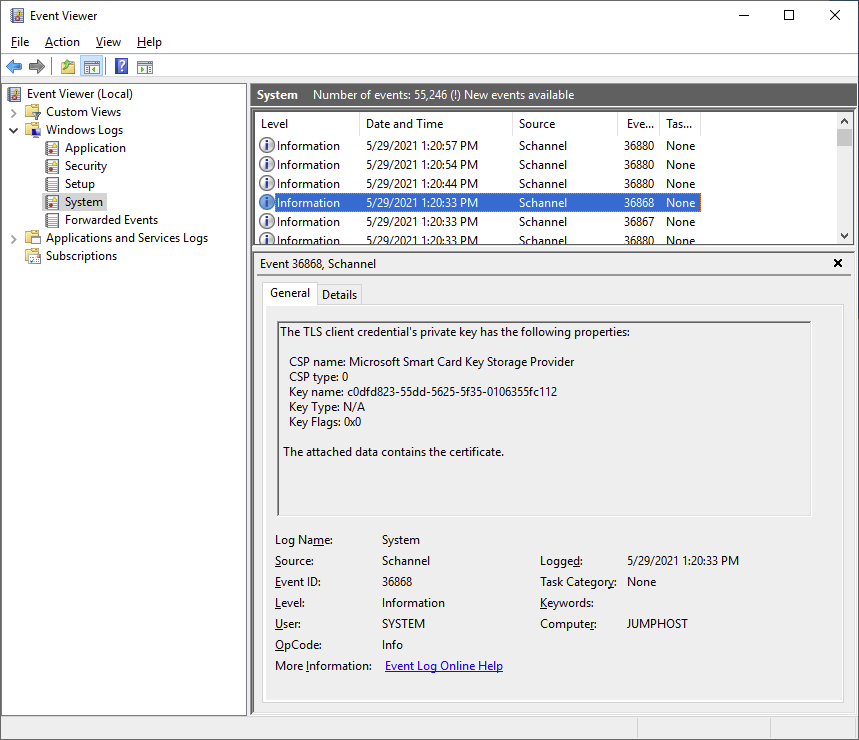

So a TLS “fatal alert” was generated by the client. We can also see that it does find the certificate and that the key container matches the one on the YubiKey:

So nothing seems to be wrong with the certificate. Except, the last successful logon was using the ECC certificate, and not the RSA one. Let’s try that again and see what we can find in the logs:

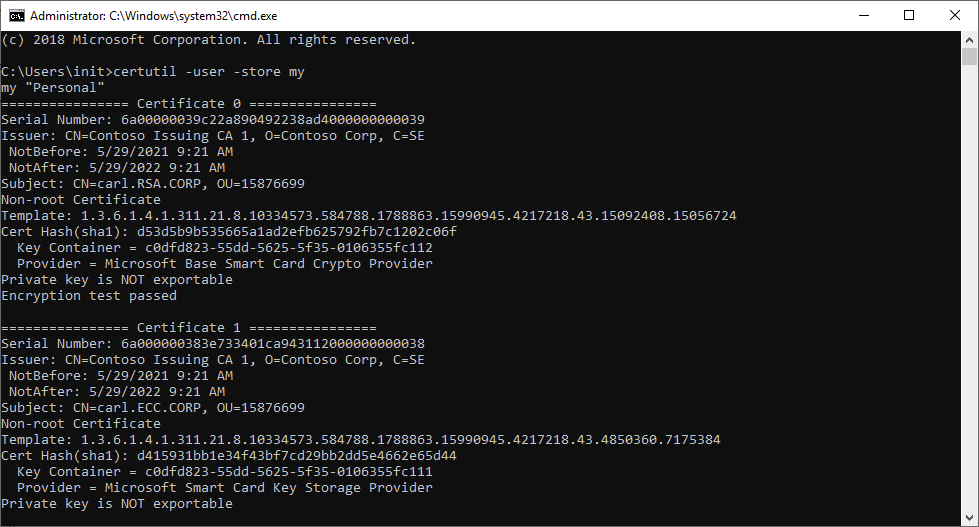

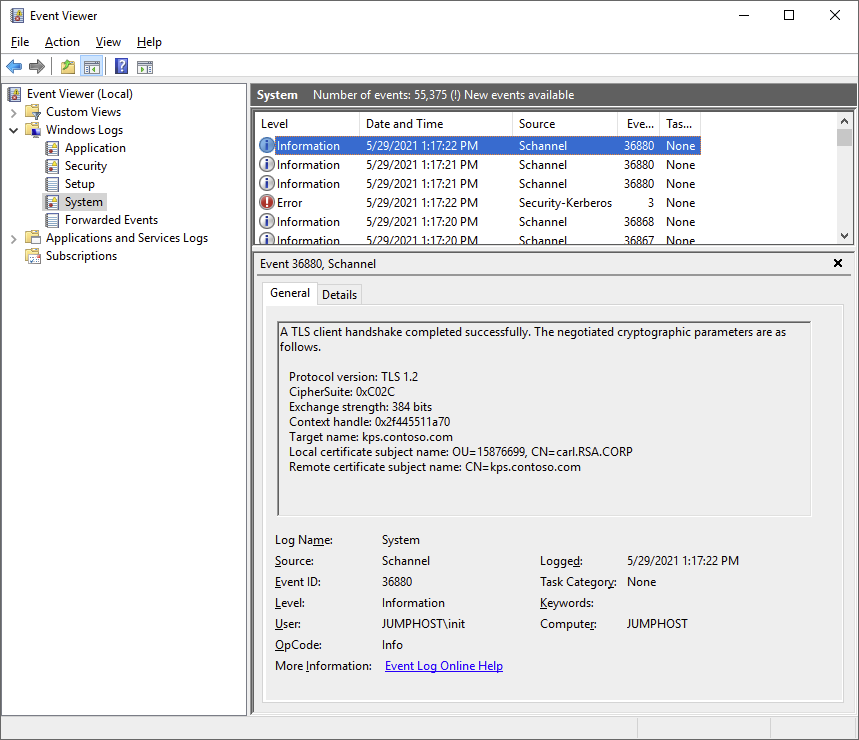

The logon works, and we can see that a connection to the KDC Proxy was successful. We can also see that the TLS handshake now has a client certificate in addition to the server certificate: it appears that when SCHANNEL establishes the TLS handshake with the KDC Proxy, it fails to read the RSA key but not the ECC one. There is another difference as well: while the ECC key is read using the Microsoft Smart Card Key Storage Provider (KSP), the RSA key is read using the Microsoft Base Smart Card Crypto Provider (CSP). From a Windows perspective, this is completely counterintuitive, as basically all Windows components support RSA and the CSP, but not all support the KSP.

Interestingly, CAPI2 can actually read smart card key containers for RSA keys using both the legacy CSP and the modern KSP but seems to favor the CSP, while for ECC only the KSP is available. This can be seen in the output of the certutil -scinfo command, where the smartcard subsystem first makes a call to the CSP for the RSA certificate, followed by a call to the KSP:

Could it be that SCHANNEL has trouble using RSA keys through the CSP? Naaah, that can’t be it, right? I’d figure there would be so many other things that wouldn’t work if that were the case. But maybe it is the case here, in combination with YubiKeys and the YubiKey minidriver.

Maybe we could force SCHANNEL to use the KSP for RSA keys as well? I couldn’t find any official Microsoft documentation on how to do so, but having messed around in the registry many many times I figured I could probably do it anyway.

Back to the Calais key where the YubiKey minidriver is announced to the system. We can see here that there are two values, one for the CSP and one for the KSP.

So I just deleted the Crypto Provider entry. Poof!

Now most Windows stuff doesn’t realize that something has changed until after a reboot, but I gave it a go anyway:

Well, it didn’t work, and SCHANNEL still uses the CSP as we can see here:

Let’s try rebooting the client, try again and see what happens.

This time, it works! Looking at the event logs we can see the following:

Now we’re using the KSP instead of the CSP for the RSA key, and now the connection succeeds. I also tried to log in to ADFS again which also still works:

Problem solved! We can now use an RSA key pair for both RDP, ADFS and KDC Proxy.
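To summarise what the logs showed, here is a Python model of the provider choice as observed in this article. It is not Windows’ actual selection logic, and it ignores the reboot that was needed before the change took effect. The value name `Crypto Provider` comes from the Calais key shown above; `Smart Card Key Storage Provider` as the name of the KSP value is my assumption.

```python
from typing import Set

CSP_VALUE = "Crypto Provider"
KSP_VALUE = "Smart Card Key Storage Provider"  # assumed value name


def observed_provider(key_algorithm: str, calais_values: Set[str]) -> str:
    """Model of the provider choice *observed* in this article (not Windows' real logic)."""
    has_csp = CSP_VALUE in calais_values
    has_ksp = KSP_VALUE in calais_values
    if key_algorithm == "ECC":
        # ECC keys are only reachable through the KSP.
        if has_ksp:
            return "KSP"
    elif key_algorithm == "RSA":
        # RSA keys went through the CSP whenever it was registered, else the KSP.
        if has_csp:
            return "CSP"
        if has_ksp:
            return "KSP"
    else:
        raise ValueError(f"unsupported algorithm: {key_algorithm!r}")
    raise LookupError(f"no provider available for {key_algorithm}")


registered = {CSP_VALUE, KSP_VALUE}
print(observed_provider("RSA", registered))                # CSP
print(observed_provider("RSA", registered - {CSP_VALUE}))  # KSP
print(observed_provider("ECC", registered))                # KSP
```

The second call corresponds to the state after deleting the Crypto Provider entry and rebooting, which is when the RSA logon through the KDC Proxy started working.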

Solution feasibility

So whenever you find a solution to a problem or issue, you should consider its feasibility. The KDC Proxy is a Microsoft-supported feature, so no question there. Using YubiKeys is definitely feasible, or Yubico would have gone out of business long ago. Installing a minidriver with an additional switch? Feasible, and can be automated or added to base client images. Client GPO settings? Sure, no problem.

But… directly interfering with the registry and the smartcard subsystem? Removing that entry now causes the following error in certutil -scinfo:

It still works with the KSP, but we have now introduced a subtle error whose impact we cannot know. In fact, once during my testing, removing that entry caused the RDP certificate selection dialog to be unable to read the RSA certificate at all, and the behavior appears to be inconsistent. Consider the poor fellow unfamiliar with this concept trying to troubleshoot this in the future. It may be fine for a lab setup, but I would strongly advise against implementing it in any production environment.

What I ended up doing instead was to simply issue two certificates per account, one with an ECC key and one with an RSA key. So for my “carl” account I would have a “carl.ECC.CORP” and a “carl.RSA.CORP” certificate on the same YubiKey. The issuance procedure I used to provision the certificates to the YubiKey allowed me to define custom subject names, which makes it easy to distinguish between them as well. So whenever someone needs to log on through RDP, they use the ECC certificate, and for the infrastructure components using ADFS as an identity provider they use the RSA certificate instead. It’s a little clunky, but it is supported and we do not have to make any undue modifications to core Windows settings to make it work.

Summary and conclusion

Let’s start with a short summary on what needs to be done on the KDC Proxy server:

- Acquire a Server Authentication certificate

- Configure the KDC Proxy service

- Ensure connectivity to at least one KDC

- Allow outside access to the KDC Proxy HTTP port

On the client:

- Add the CA certificates of the target environment to the local machine store

- Add the issuer CA certificate of the domain controllers’ KDC certificate to the local enterprise NTAuth store

- Ensure that the KDC Proxy server is accessible, and that the DNS name is resolvable (either through DNS or the local hosts file)

- Ensure that the URLs in the KPS and KDC certificates’ CDP extension can be resolved, and that the CRLs can be downloaded for the client to perform a revocation check (you can publish an application in WAP for this if you wish)

- Ensure that the RDP target host FQDN is resolvable (either through DNS or the local hosts file)

- Configure KDC Proxy client settings through GPO

- Configure ECC certificate logon

- Install the YubiKey Minidriver

I have yet to figure out why the KSP works with the KDC Proxy but the CSP doesn’t. My findings force me to conclude that this is a bug on either Microsoft’s or Yubico’s side; I have not tested with other smart cards or tokens simply because I don’t have any. I’m leaning towards a bug on Yubico’s side, however, since I figure that a lot of other stuff wouldn’t work if it were on Microsoft’s side.

One thing to remember here as well is that you need to keep an eye on the validity of the KDC Proxy certificate. If it expires, no RDP authentication through the KDC Proxy will work anymore.
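A trivial monitoring check for this could look like the Python sketch below. The 30-day threshold and the function name are arbitrary choices for illustration, and obtaining the NotAfter date of the KPS certificate is left out.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def kps_cert_expiry_status(not_after: datetime, now: Optional[datetime] = None,
                           warn_days: int = 30) -> str:
    """Classify a certificate's NotAfter date relative to now (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    if remaining <= timedelta(days=warn_days):
        return f"expires in {remaining.days} days"
    return "ok"


now = datetime(2021, 5, 25, tzinfo=timezone.utc)
print(kps_cert_expiry_status(datetime(2021, 6, 10, tzinfo=timezone.utc), now=now))  # expires in 16 days
```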

A short note on versions used to end with:

- The operating system used for all servers/clients is Windows Server 2019 with the latest updates as of 2021-05-25.

- The Yubico Minidriver is version 4.1.1.210-x64.

- The YubiKeys used are YubiKey 5 NFC version 5.27.

Until next time!

Thanks for such a great article.

We’re looking to use this to enable NLA whilst doing some security hardening.

We’re having an issue with something similar and were hoping you would be able to help.

We have an old 2016 and a new 2019 rd farm. We use smart cards (although not YubiKeys).

On the 2016 farm, everything works fine for both domain-joined and non-domain-joined machines.

On the 2019 farm, domain-joined machines work fine, but non-domain-joined machines can’t authenticate to rdweb with smart cards (testing with passwords is successful). I’ve edited the web.config in rdweb\pages to allow smart cards etc.

For non-domain-joined machines on the new 2019 rdweb, it just keeps asking for authentication until it states that the user is not authorised. (The same user is used for the domain and non-domain tests, and both tests are successful on the 2016 farm.)

Any help would be greatly appreciated

Kind regards

Andrew


Thanks, it cleared a lot of my knowledge gaps! Excellent work.

I am studying a similar POC to give third parties access with MFA. I am testing a recent feature: starting July 21, Azure Virtual Desktop can be authenticated with Azure AD and ADFS. The landing page is authenticated with Azure AD (MFA, Conditional Access, etc.). The remote desktop sessions/apps are authenticated by ADFS, and it is possible to configure ADFS to ask for a second factor via the Azure Authenticator app (or to configure OTP as the first and only factor, a perfect balance between security and ease of use): https://docs.microsoft.com/en-us/azure/virtual-desktop/configure-adfs-sso.


About the issuer of the KDC certificate having to be in the NTAuth store: on the DC and on the machine where we log on, yes. But on the machine from which we initiate RDP/NLA, no. The KDC certificate must be trusted, but its issuer doesn’t need to be in NTAuth.


More precisely, this depends on KERBEROS_MACHINE_ROLE.

If the role is KerbRoleRealmlessWksta or KerbRoleStandalone, CERT_CHAIN_POLICY_BASE is used.

If the role is KerbRoleWorkstation or KerbRoleDomainController and the machine has been rebooted since joining that role, CERT_CHAIN_POLICY_NT_AUTH is used.
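The rule in this comment can be written down as a small Python function. It is based only on the commenter’s reading of KerbCheckKdcCertificate, not on documentation I can confirm, and the comment does not say what happens on a domain-joined machine that has not yet been rebooted after joining; the sketch assumes the base policy in that case.

```python
def kdc_cert_chain_policy(machine_role: str, rebooted_since_join: bool) -> str:
    """Chain policy used for the KDC certificate, as described in the comment above."""
    if machine_role in ("KerbRoleRealmlessWksta", "KerbRoleStandalone"):
        return "CERT_CHAIN_POLICY_BASE"
    if machine_role in ("KerbRoleWorkstation", "KerbRoleDomainController"):
        # Assumption: before the post-join reboot, the base policy is still used.
        return "CERT_CHAIN_POLICY_NT_AUTH" if rebooted_since_join else "CERT_CHAIN_POLICY_BASE"
    raise ValueError(f"unknown machine role: {machine_role!r}")


print(kdc_cert_chain_policy("KerbRoleRealmlessWksta", rebooted_since_join=False))  # CERT_CHAIN_POLICY_BASE
print(kdc_cert_chain_policy("KerbRoleWorkstation", rebooted_since_join=True))      # CERT_CHAIN_POLICY_NT_AUTH
```

In other words, only a domain-joined client requires the KDC certificate’s issuer to be in NTAuth; a standalone client just needs a trusted chain.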


Interesting. Probably because the local NTAuth store doesn’t exist on non-domain joined machines, I would assume. I rarely work with standalone servers or clients, so I just assumed that most people were on a domain-joined client. Thanks for the addition!


The NTAuth store exists on any Windows machine (at HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\EnterpriseCertificates\NTAuth\Certificates). Conversely, 99%+ of the time I work on non-domain-joined machines (KerbRoleRealmlessWksta). Recently I was looking for a way to resolve a problem with NLA and smart card logon: I log on with a smart card (certificate) from a non-domain-joined machine to a domain-joined one. The KDC is reachable from there, but because the machine is not joined to the domain, the domain name (realm) cannot be resolved (DsGetDcName fails for the realm from the certificate). Simply adding this name to the hosts file doesn’t help either: DsGetDcName does a DNS query for DNS_TYPE_SRV, but the hosts file only creates DNS_TYPE_AAAA and DNS_TYPE_A records. So I searched for a solution and found your article, which helped me. Although I don’t use a KDC proxy, I found another solution: use “ksetup /addkdc ” to resolve the realm directly to an IP.

About the NTAuth policy: you can see this in the code (KerbCheckKdcCertificate). Before CertVerifyCertificateChainPolicy is called, either CERT_CHAIN_POLICY_BASE or CERT_CHAIN_POLICY_NT_AUTH is selected, based on KerbGlobalRole and fRebootedSinceJoin.


Hi, great post! I tried something similar, but on logon the client reports that it cannot validate the CRL. It keeps trying the LDAP CRL.


Do you have a HTTP URL in the CDP extension of the certificate as well, or only LDAP? If you’re using this guide to authenticate between environments, you probably can’t connect to LDAP in the target domain/forest to verify the CRL, and you’d need to publish it using HTTP. In my case, I published the internal CDPs using WAP as a reverse proxy.


Hi, I think I forgot the AIA. I’ll try adding an HTTP URL to that too, load new certs onto the YubiKey and give it another try.


Thanks for a great article!

I’m trying to create a similar environment to the one in this article, and I came across it when searching for why my smart cards weren’t working. So I set up the KDC proxy as you described, and it works if I use the option “kdcproxyname:s:” in the RDP file. For example, setting it to “kdcproxyname:s:kdcproxy.contoso.tld:444” works, but I cannot get it to work when using the GPO setting. I cannot figure out why, and I can’t find any good information on how to troubleshoot it. What I can see in Wireshark is that when using the RDP file, I get traffic to the KDC proxy, but not when I remove the value. So my guess is that it’s not getting the realm right, since it isn’t using the KDC proxy as the setting says. Or maybe it’s something else.

Do you have any suggestions?


Are you using the FQDN of the target server? As I mention in the article, the Kerberos client cannot use the KDC Proxy GPO settings unless the FQDN of the target server name matches a configured realm in the GPO. Simply using the hostname of the target server in mstsc won’t work.


Thank you for replying!

I’m using FQDN to the environment.

rdgw: rdgw.ot.contoso.tld

kdcproxy on the same server as rdgw but on port 444 so: rdgw.ot.contoso.tld:444

server behind the rdgw: server1.ot.contoso.tld

Username: testuser@ot.contoso.tld

Gpo setting: “.ot.contoso.tld” -> ”

mstsc rdgw: rdgw.ot.contoso.tld

mstsc computer: server1.ot.contoso.tld

The computer I’m trying to connect from is domain-joined to ad.contoso.tld, a domain that has no trusts with the target environment and shares nothing with it except the contoso.tld suffix, and hence the DNS namespace.

I don’t see anything wrong in this setup =(


Try removing the leading period in the GPO setting, i.e. “ot.contoso.tld” instead of “.ot.contoso.tld”. Also, I don’t see the actual value in your comment; it might have been stripped because of the tags.


Hi any idea?


Thanks for replying! Greatly appreciated!

Yes, it’s likely the tags. the value is “>https rdgw.ot.contoso.tld:444:KdcProxy />” (The first char is reversed so hopefully it survives)

I’ve tried adding 3 realms with the same value.

“.ot.contoso.tld”

“ot.contoso.tld”

“server1.ot.contoso.tld”

But it isn’t working =(


Hi, I have the CRL working now, and the root has been added both to the smart card and to the machine.

– Certificate

[ fileRef] 70D2B4D7763116F6B86A08FB7FFE3F00218F6434.cer

[ subjectName] PAW.lab.local

– SignatureAlgorithm

[ oid] 1.2.840.113549.1.1.11

[ hashName] SHA256

[ publicKeyName] RSA

– PublicKeyAlgorithm

[ oid] 1.2.840.113549.1.1.1

[ publicKeyName] RSA

[ publicKeyLength] 2048

– TrustStatus

– ErrorStatus

[ value] 20

[ CERT_TRUST_IS_UNTRUSTED_ROOT] true

– InfoStatus

[ value] 10C

[ CERT_TRUST_HAS_NAME_MATCH_ISSUER] true

[ CERT_TRUST_IS_SELF_SIGNED] true

[ CERT_TRUST_HAS_PREFERRED_ISSUER] true

– ApplicationUsage

– Usage

[ oid] 1.3.6.1.5.5.7.3.1

[ name] Server Authentication

– IssuanceUsage

[ any] true

– EventAuxInfo

[ ProcessName] lsass.exe

– CorrelationAuxInfo

[ TaskId] {3DC810BD-7E2E-4F70-B580-9DD62639694F}

[ SeqNumber] 3

+ Result A certificate chain processed, but terminated in a root certificate which is not trusted by the trust provider.

[ value] 800B0109
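The ErrorStatus and InfoStatus values in a CAPI2 event like the one above are bit flags, and 0x800B0109 is the CERT_E_UNTRUSTEDROOT result. This Python sketch decodes the hex values using a subset of the CERT_TRUST_* flag values from wincrypt.h as I understand them; the table and function names are illustrative. Decoded, the log says the chain ends in a self-signed certificate (the Server Authentication certificate for PAW.lab.local) that the client does not trust as a root.

```python
from typing import Dict, List

# Subset of the CERT_TRUST_* flags from wincrypt.h.
CERT_TRUST_ERROR_STATUS = {
    0x00000001: "CERT_TRUST_IS_NOT_TIME_VALID",
    0x00000002: "CERT_TRUST_IS_NOT_TIME_NESTED",
    0x00000004: "CERT_TRUST_IS_REVOKED",
    0x00000008: "CERT_TRUST_IS_NOT_SIGNATURE_VALID",
    0x00000010: "CERT_TRUST_IS_NOT_VALID_FOR_USAGE",
    0x00000020: "CERT_TRUST_IS_UNTRUSTED_ROOT",
    0x00000040: "CERT_TRUST_REVOCATION_STATUS_UNKNOWN",
    0x00000080: "CERT_TRUST_IS_CYCLIC",
}
CERT_TRUST_INFO_STATUS = {
    0x00000001: "CERT_TRUST_HAS_EXACT_MATCH_ISSUER",
    0x00000002: "CERT_TRUST_HAS_KEY_MATCH_ISSUER",
    0x00000004: "CERT_TRUST_HAS_NAME_MATCH_ISSUER",
    0x00000008: "CERT_TRUST_IS_SELF_SIGNED",
    0x00000100: "CERT_TRUST_HAS_PREFERRED_ISSUER",
}


def decode_flags(hex_value: str, table: Dict[int, str]) -> List[str]:
    """Decode a hex status value from a CAPI2 event into flag names (known bits only)."""
    value = int(hex_value, 16)
    return [name for bit, name in sorted(table.items()) if value & bit]


print(decode_flags("20", CERT_TRUST_ERROR_STATUS))  # ['CERT_TRUST_IS_UNTRUSTED_ROOT']
print(decode_flags("10C", CERT_TRUST_INFO_STATUS))
# ['CERT_TRUST_HAS_NAME_MATCH_ISSUER', 'CERT_TRUST_IS_SELF_SIGNED', 'CERT_TRUST_HAS_PREFERRED_ISSUER']
```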
